Let’s start with a scenario that every GRC analyst has lived through.

The Real-World Disconnect

Imagine you are onboarding a new SaaS provider, “Vendor X.” You send them your standard SIG Core questionnaire (all 300 rows of Excel). Three weeks later, they reply.

- Question 42: “Do you patch critical vulnerabilities within 7 days?”

- Vendor X’s Answer: “Yes.”

You mark them as “Compliant” and approve the contract. Two months later, Vendor X suffers a massive ransomware event. The cause? An unpatched internet-facing server that had been vulnerable for 90 days.

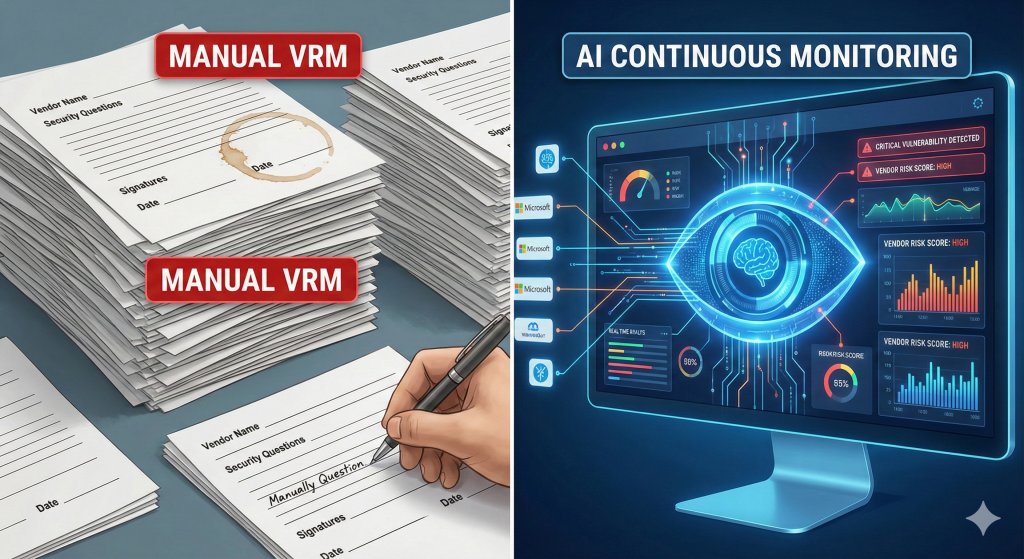

What went wrong? The questionnaire didn’t lie, but it didn’t tell the truth, either. It measured policy (what the vendor wrote in a document), not reality (what was actually happening on their network). The questionnaire was a “point-in-time” snapshot that was obsolete the moment it was emailed back to you.

For decades, Vendor Risk Management (VRM) has relied on this “trust but verify” model via spreadsheets. But in an era where supply chains are massive and zero-day exploits happen overnight, this model is broken. As we head into the new year, we must pivot to AI-Driven Continuous Monitoring.

Scaling the Unscalable

The fundamental problem with VRM is math. If you have 500 vendors, and it takes 4 hours to review each vendor’s SOC 2 report and questionnaire, you need 2,000 analyst-hours just to do the basics. You cannot hire enough analysts to keep up.
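To make that arithmetic easy to rerun with your own numbers, here is a minimal sketch. The function name, the 2,000-hour working year, and the idea of repeating the review several times a year are illustrative assumptions, not figures from any standard.

```python
def review_workload(vendors, hours_per_review, reviews_per_year=1,
                    hours_per_analyst_year=2000.0):
    """Return (total review hours per year, analyst-years needed).

    hours_per_analyst_year is an assumed planning figure; adjust it
    for your own team.
    """
    total_hours = vendors * hours_per_review * reviews_per_year
    analyst_years = total_hours / hours_per_analyst_year
    return total_hours, analyst_years


# The scenario from the text: 500 vendors, 4 hours each, reviewed once.
annual_hours, annual_analysts = review_workload(500, 4)
print(f"Annual review: {annual_hours:,.0f} hours")

# A hypothetical quarterly cadence multiplies the load by four.
quarterly_hours, quarterly_analysts = review_workload(500, 4, reviews_per_year=4)
print(f"Quarterly review: {quarterly_hours:,.0f} hours, "
      f"about {quarterly_analysts:.0f} analyst-years")
```

Even under these generous assumptions, the workload covers only reading documents. It leaves no time for follow-up, remediation tracking, or new vendor onboarding.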

AI changes this equation by automating the drudgery, allowing your team to focus on the decision-making. Here is how the technology is reshaping the three pillars of VRM.

1. Automated Document Analysis

Reading a 120-page SOC 2 Type II report is tedious. Finding the one “Qualified Opinion” or the three “Exceptions” buried in Section 4 is difficult.

Modern Large Language Models (LLMs) are revolutionizing this. You can now feed a SOC 2 PDF into a private AI instance, and within seconds, it can:

- Extract all noted exceptions.

- Summarize the auditor’s opinion.

- Map the vendor’s controls directly to your internal security policy.

- Flag inconsistencies (e.g., “The report says they use MFA, but the exception in Section 4 notes a failure in MFA implementation”).
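The extraction step can be approximated, very roughly, without any model at all. The sketch below is a plain keyword pre-filter, not an LLM: it assumes you have already extracted the report text page by page, and the patterns and names (`FLAG_PATTERNS`, `flag_pages`) are hypothetical and would need tuning against real reports. It only shows what kind of output you would expect an AI tool to hand back to a reviewer.

```python
import re
from dataclasses import dataclass

# Hypothetical phrases that often signal a finding in an audit report.
# "\bqualified" deliberately does not match inside "unqualified".
FLAG_PATTERNS = {
    "qualified_opinion": re.compile(r"\bqualified opinion\b", re.IGNORECASE),
    "exception": re.compile(
        r"\bexceptions? (?:was|were) noted\b|\bdeviations? noted\b",
        re.IGNORECASE,
    ),
}


@dataclass
class Flag:
    page: int      # 1-based page number
    kind: str      # key from FLAG_PATTERNS
    excerpt: str   # short context around the match


def flag_pages(pages):
    """Scan a list of page texts and return one Flag per page and pattern hit."""
    flags = []
    for number, text in enumerate(pages, start=1):
        for kind, pattern in FLAG_PATTERNS.items():
            match = pattern.search(text)
            if match:
                start = max(match.start() - 40, 0)
                excerpt = text[start:match.end() + 40].strip()
                flags.append(Flag(number, kind, excerpt))
    return flags
```

A keyword filter like this misses paraphrased findings and cannot map controls to your policy, which is exactly the gap an LLM is meant to close. It is still a useful sanity check on whatever the model reports.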

2. Continuous Passive Scanning

While questionnaires ask “Are you secure?”, AI-driven passive scanning looks at the vendor’s internet-facing footprint to see whether they actually are.

These tools scan the vendor’s public-facing infrastructure 24/7/365. They look for:

- Open ports and exposed databases.

- Expired SSL/TLS certificates.

- Evidence of compromised credentials on the Dark Web.

- DNS misconfigurations.
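To give a feel for what one of these checks involves, here is a minimal sketch of the certificate check using only Python’s standard library. The function names and the idea of warning some days ahead are illustrative assumptions; commercial scanners do far more than this.

```python
import socket
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Days left before a certificate's notAfter timestamp.

    not_after uses the format returned by getpeercert(),
    e.g. "Jan  5 09:34:43 2018 GMT".
    """
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires - current) / 86400


def fetch_not_after(host, port=443, timeout=5.0):
    """Fetch the notAfter field of a host's certificate over TLS.

    With the default context, an already expired or otherwise invalid
    certificate raises ssl.SSLCertVerificationError instead of
    returning a value, which is itself a finding worth recording.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

Calling `days_until_expiry(fetch_not_after("vendor.example"))` on a schedule, where `vendor.example` stands in for a real hostname, would tell you when a vendor is about to let a certificate lapse.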

If a vendor’s risk score drops overnight because they spun up an unsecured server, you get an alert the next morning—not when their contract renewal comes up next year.
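The overnight alert itself is simple once you have daily scores. The sketch below assumes scores are integers keyed by vendor name and that a drop of 10 points is worth waking someone up for. Both the data shape and the threshold are assumptions, since each rating provider uses its own scale.

```python
def score_drops(previous, current, threshold=10):
    """Return (vendor, old_score, new_score) for every vendor whose score
    fell by at least `threshold` points, largest drop first.

    Vendors that appear only in `current` are skipped because there is
    no baseline to compare against.
    """
    alerts = []
    for vendor, new_score in current.items():
        old_score = previous.get(vendor)
        if old_score is not None and old_score - new_score >= threshold:
            alerts.append((vendor, old_score, new_score))
    return sorted(alerts, key=lambda a: a[1] - a[2], reverse=True)


# Hypothetical scores for two vendors on consecutive days.
yesterday = {"Vendor X": 88, "Vendor Y": 91}
today = {"Vendor X": 61, "Vendor Y": 90}

for vendor, old, new in score_drops(yesterday, today):
    print(f"ALERT: {vendor} dropped from {old} to {new}")
```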

3. Predictive Risk Analytics

The next frontier is using AI to predict vendor failure before a breach occurs. By analyzing vast amounts of non-security data—financial reports, sudden leadership changes, layoffs, or lawsuits—AI models can predict operational instability.

Often, a company cuts its security budget after it runs into financial trouble. Predictive AI warns you that a vendor is becoming unstable, allowing you to prepare for a disruption or a security lapse before it happens.

The Human in the Loop

Does this mean the GRC analyst is obsolete? Absolutely not.

AI is a tool for triage. It clears the noise. It tells you, “These 400 vendors are fine, but these 3 have critical issues.” Your job shifts from being a “spreadsheet chaser” to a “risk decision-maker.” You stop spending your time gathering data and start spending your time acting on it.

Discussion

- Trust Issues: Do you trust “passive scanning” scores (like SecurityScorecard or BitSight) enough to make a contract decision, or do you still require the questionnaire as the primary source of truth?

- The AI Fear: Is your organization allowing you to use AI tools for summarizing confidential documents like SOC 2 reports, or are privacy concerns blocking adoption?
